Software: Python, AWS

This project, which I completed with a teammate, lets users upload any image of their choosing through our interactive UI. The image is sent to AWS, where it is classified as either containing or not containing an animal; if an animal is found and recognized, it is further classified by its animal class. Once AWS has classified the image, the Streamlit website updates to show a bar chart indicating whether or not an animal was present, the breakdown of animal classes, and Rekognition’s classification of the image (animal or not). To build this final product, our team used the AWS services S3, EC2, RDS, Lambda, and Rekognition, along with Streamlit for the final UI/website. The data for our project are images sourced from an experiment based on raw camera trap images from Katra Laidlaw, an education specialist at the New England Zoo.
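The animal/no-animal decision described above can be sketched as a small pure function over a Rekognition `DetectLabels` response. This is a minimal sketch, not our exact code: it assumes each label carries the `Parents` list that `DetectLabels` returns, treats an image as containing an animal if any sufficiently confident label is `Animal` or has `Animal` as a parent, and uses a hypothetical `ANIMAL_CLASSES` set and an assumed 80% confidence cutoff to pick out class-level labels.

```python
# Hypothetical set of class-level labels to report; Rekognition's label
# taxonomy is much larger, so adjust this to the labels your images produce.
ANIMAL_CLASSES = {"Mammal", "Bird", "Reptile", "Amphibian", "Fish", "Insect"}


def summarize_labels(response: dict, min_confidence: float = 80.0) -> dict:
    """Reduce a DetectLabels response to animal presence plus animal classes."""
    animal_present = False
    found_classes = set()
    for label in response.get("Labels", []):
        # Skip labels below the (assumed) confidence cutoff.
        if label.get("Confidence", 0.0) < min_confidence:
            continue
        name = label["Name"]
        parents = {parent["Name"] for parent in label.get("Parents", [])}
        # An image counts as "animal" if Animal is a label or a parent label.
        if name == "Animal" or "Animal" in parents:
            animal_present = True
        if name in ANIMAL_CLASSES:
            found_classes.add(name)
    return {"animal_present": animal_present, "classes": sorted(found_classes)}


if __name__ == "__main__":
    # Made-up response for illustration only (not real project output).
    sample = {"Labels": [
        {"Name": "Deer", "Confidence": 97.1,
         "Parents": [{"Name": "Mammal"}, {"Name": "Animal"}]},
        {"Name": "Mammal", "Confidence": 97.1, "Parents": [{"Name": "Animal"}]},
        {"Name": "Tree", "Confidence": 64.0, "Parents": [{"Name": "Plant"}]},
    ]}
    print(summarize_labels(sample))
    # prints {'animal_present': True, 'classes': ['Mammal']}
```

Keeping this logic free of AWS calls means it can be tested on saved responses before it is wired into Lambda.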

Overall, our project aims to achieve three main goals:

Create an interactive user interface that allows users to upload any image(s) of their choosing for classification

Use AWS Rekognition to programmatically identify whether an image contains an animal and, if so, classify it

Provide users with specific information about the animal classification for eventual use in wildlife research and environmental conservation efforts

Our team hopes that our project can provide a starting point for wildlife research groups to automate the identification of species found in their reserves and tailor their conservation efforts to support the safety and comfort of these animals accordingly.

Sample image and Rekognition label

Architecture diagram

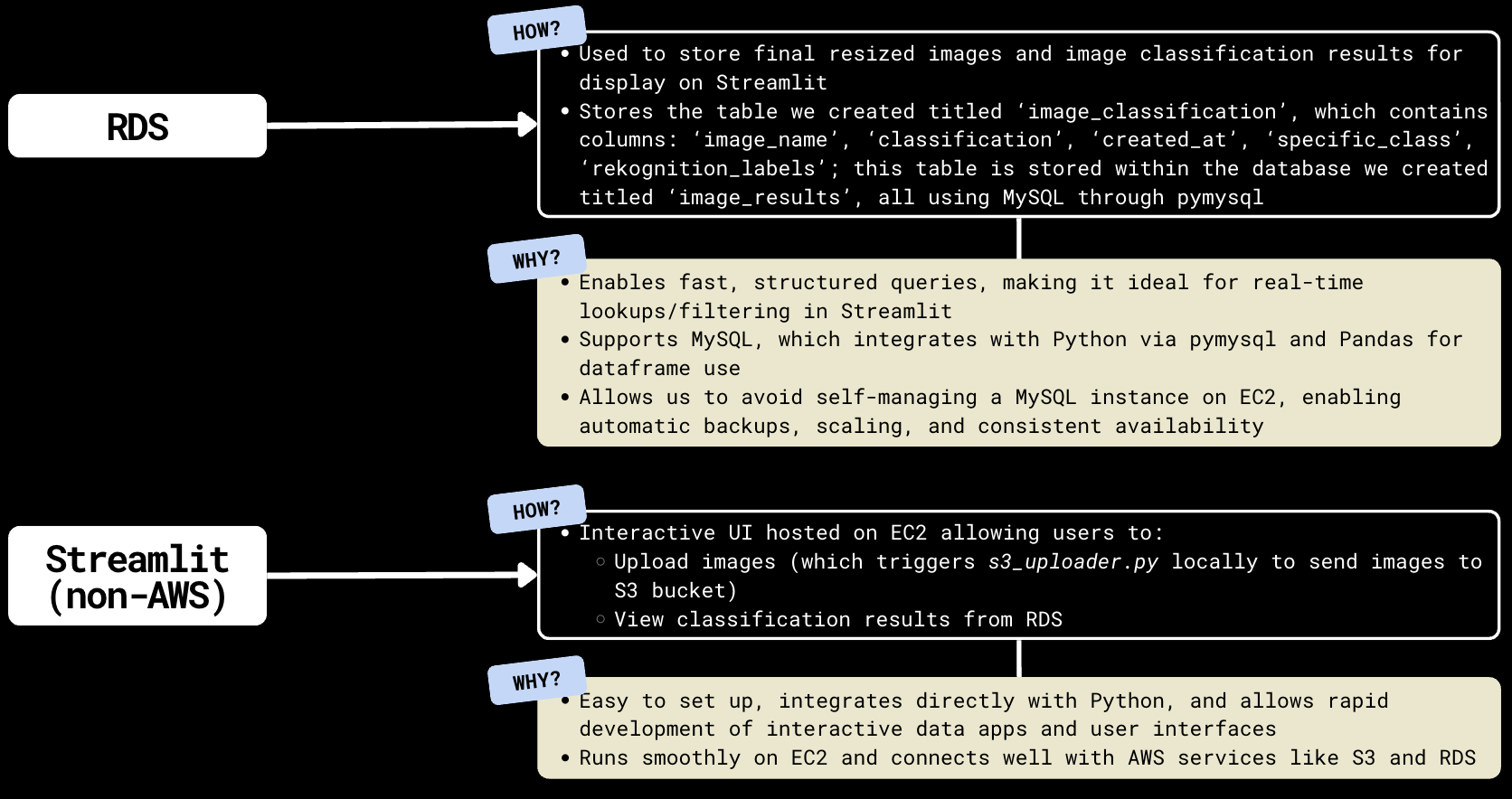

1. AWS Rekognition

AWS Rekognition was crucial to our pipeline, but it was not a service we covered in class.

To use Rekognition in our pipeline, we had to research the core functionality of the service, how to call Rekognition from within Lambda, and how to store the classification results in RDS so they could later be pulled for use in the UI.
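One way to call Rekognition from Lambda and store the results in RDS is sketched below. It is a minimal sketch under several assumptions, not our exact code: the function is triggered by an S3 upload event, results go into a hypothetical `image_labels` table, and `get_connection()` is a hypothetical placeholder for whatever driver connects to the RDS instance (for a MySQL-compatible instance this might be PyMySQL). `detect_labels` with an `S3Object` image is the real boto3 Rekognition call; the `MaxLabels` and `MinConfidence` values are arbitrary choices. The `__main__` block uses an in-memory SQLite database as a stand-in for RDS so the storage logic can be tried locally.

```python
import json
import sqlite3  # stand-in for an RDS driver in the local demo below
from urllib.parse import unquote_plus

# Hypothetical table layout; the real RDS schema may differ.
SCHEMA = """
CREATE TABLE IF NOT EXISTS image_labels (
    image_key  TEXT NOT NULL,
    label      TEXT NOT NULL,
    confidence REAL NOT NULL
)
"""


def store_labels(conn, image_key: str, labels: list) -> int:
    """Insert one row per Rekognition label; returns the number of rows written."""
    rows = [(image_key, lbl["Name"], float(lbl["Confidence"])) for lbl in labels]
    cur = conn.cursor()
    # "?" is sqlite3's placeholder style; PyMySQL and psycopg2 use "%s" instead.
    cur.executemany(
        "INSERT INTO image_labels (image_key, label, confidence) VALUES (?, ?, ?)",
        rows,
    )
    conn.commit()
    return len(rows)


def get_connection():
    """Hypothetical helper: open a DB-API connection to the RDS instance."""
    # e.g. pymysql.connect(host=..., user=..., password=..., database=...)
    raise NotImplementedError("configure the RDS connection here")


def lambda_handler(event, context):
    """Triggered by an S3 upload: label the new image and store the results."""
    import boto3  # bundled with the AWS Lambda Python runtime

    rekognition = boto3.client("rekognition")
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = unquote_plus(record["object"]["key"])  # S3 event keys are URL-encoded

    response = rekognition.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=20,
        MinConfidence=70,
    )
    conn = get_connection()
    try:
        written = store_labels(conn, key, response["Labels"])
    finally:
        conn.close()
    return {"statusCode": 200, "body": json.dumps({"key": key, "labels": written})}


if __name__ == "__main__":
    # Local smoke test with an in-memory SQLite database standing in for RDS.
    demo = sqlite3.connect(":memory:")
    demo.execute(SCHEMA)
    print(store_labels(demo, "trap/img_001.jpg", [{"Name": "Animal", "Confidence": 99.0}]))
    # prints 1
```

Storing one row per label keeps the table simple to aggregate later with plain SQL (for example, counting images per label for a chart).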

2. Local vs. remote execution

In our pipeline, we used both locally executed scripts (e.g., s3_uploader.py) and remotely executed scripts/cloud-based deployment (e.g., process_s3_to_rds.py).

This involved becoming comfortable with command-line operations and SSH via the terminal, configuring the correct IAM permissions and EC2 security settings, managing remote connections, and understanding how the local machine and the remote server interact so that scripts execute in the correct sequence.
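A local uploader in the spirit of s3_uploader.py might look like the sketch below. The bucket name, key prefix, and function names are hypothetical; boto3's `upload_file(Filename, Bucket, Key)` is the real S3 client call, and it picks up credentials from the local AWS configuration (for example, what `aws configure` writes). The suffix filter reflects the fact that Rekognition only accepts JPEG and PNG images.

```python
from pathlib import Path

BUCKET = "animal-images-bucket"  # hypothetical bucket name
PREFIX = "uploads/"              # hypothetical key prefix
# Rekognition accepts JPEG and PNG images, so everything else is skipped.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def is_supported_image(path: Path) -> bool:
    """True if the file extension is one Rekognition can process."""
    return path.suffix.lower() in IMAGE_SUFFIXES


def object_key(path: Path, prefix: str = PREFIX) -> str:
    """Build the S3 key for a local file: prefix plus bare file name."""
    return prefix + path.name


def upload_images(folder: str, bucket: str = BUCKET, prefix: str = PREFIX) -> list:
    """Upload every supported image in `folder` to S3; return the keys used."""
    import boto3  # credentials come from the local AWS config or environment

    s3 = boto3.client("s3")
    keys = []
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and is_supported_image(path):
            key = object_key(path, prefix)
            # upload_file handles multipart uploads for large files automatically.
            s3.upload_file(str(path), bucket, key)
            keys.append(key)
    return keys
```

Calling `upload_images("camera_trap_images")` (a hypothetical folder) on the local machine would place each image under `uploads/` in the bucket, where the remote side of the pipeline can pick it up.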

3. Streamlit

We used Streamlit to implement the UI/web interface where users upload data and view image classification results.

Since neither of us had used Streamlit before, we had to learn both Streamlit's syntax and how to integrate it with the AWS services used in the pipeline (namely, EC2, S3, and RDS).

Our final Streamlit implementation includes image uploading, a connection to RDS, and plotting, all of which we had to research for the project.
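The three pieces of the Streamlit page (uploading, reading from RDS, and plotting) could be organized roughly as in this sketch. It is hypothetical: `render_app`, `rows_to_counts`, the bucket name, and the query against an `image_labels` table with `image_key` and `label` columns are assumptions, and `get_connection` stands for whatever function opens the RDS connection. The Streamlit calls used (`st.file_uploader`, `st.image`, `st.button`, `st.bar_chart`, `st.session_state`) and boto3's `upload_fileobj` are real APIs.

```python
import io

BUCKET = "animal-images-bucket"  # hypothetical bucket name
# Hypothetical query: number of distinct images per Rekognition label.
COUNTS_QUERY = (
    "SELECT label, COUNT(DISTINCT image_key) FROM image_labels GROUP BY label"
)


def rows_to_counts(rows) -> dict:
    """Convert (label, count) rows from RDS into a {label: count} dict."""
    return {str(label): int(count) for label, count in rows}


def render_app(get_connection):
    """Build the Streamlit page; `get_connection` is a hypothetical RDS connector."""
    import boto3
    import pandas as pd
    import streamlit as st

    st.title("Animal image classifier")
    uploaded = st.file_uploader("Upload an image", type=["jpg", "jpeg", "png"])
    if uploaded is not None:
        data = uploaded.getvalue()
        st.image(data, caption=uploaded.name)
        key = "uploads/" + uploaded.name
        # Streamlit reruns the script on every interaction; upload each file once.
        if st.session_state.get("last_key") != key:
            boto3.client("s3").upload_fileobj(io.BytesIO(data), BUCKET, key)
            st.session_state["last_key"] = key

    # Classification happens asynchronously after upload, so fetch on demand.
    if st.button("Refresh results"):
        conn = get_connection()
        try:
            cur = conn.cursor()
            cur.execute(COUNTS_QUERY)
            counts = rows_to_counts(cur.fetchall())
        finally:
            conn.close()
        if counts:
            chart = pd.DataFrame({"images": list(counts.values())},
                                 index=list(counts.keys()))
            st.bar_chart(chart)
        else:
            st.info("No classification results yet.")
```

Saved as app.py with a call such as `render_app(my_get_connection)` at the bottom, the page would be launched with `streamlit run app.py`. The `session_state` check matters because Streamlit re-executes the whole script on each widget interaction, which would otherwise re-upload the same file every time the button is pressed.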

This project challenged my teammate and me to apply the AWS services we had learned about in class to real-world data, with very little structure or guidance. The open-ended nature of the project initially made it difficult for our team to settle on an idea, but I am proud of our final product and of the ways it could aid wildlife conservation efforts, a cause my teammate and I both believe is important.

Admittedly, our team struggled to incorporate all of the required AWS services (S3, RDS, EC2, Lambda) because our coursework gave us very little experience to build our code and pipeline on. The same applied to Streamlit, which our professor recommended for the UI but which was new to both of us. Still, this project taught me how to learn new programming environments and external services piece by piece, making me a better, more ambitious programmer. My teammate and I collaborated very well, and as a result I was also able to improve my teamwork skills in a programming context.